A Tale Of Two Arrays

...or, another obscure rant regarding outdated hardware

Recently I have been mucking around with an old ASUS P5Q motherboard and have tested much of its RAID functionality in different ways. How everything is connected was new to me, and judging from what I see on the net, others are confused too. Let me try to explain how it works.

The board has two SATA controllers. The first is the Intel ICH10R chipset controller, with six ports, which is fairly generous. The other is a Marvell 6111 (88SE6111), a "single-port SATA II Controller and single-port PATA133 interface". It drives the PATA (IDE) interface, with a maximum of two drives, plus the single SATA port. This controller is not a RAID controller, although you could easily believe it is. You can't even get to its physical SATA port. "Wait, what, why?" you ask.

The single SATA port of the Marvell 6111 feeds into a Silicon Image "SteelVine" SiI5723, which ASUS calls "Drive Xpert technology". This is a kind of bridge chip: to the controller it looks like a normal SATA drive, but it can combine the disks behind it into RAID sets in different configurations. The chip is connected to the two non-Intel SATA ports you see on the motherboard (orange/white). It transparently "translates" the plain Marvell controller into RAID modes.

You can configure the SteelVine in RAID0 or RAID1 from the BIOS, or in further modes from Windows software. I have only tested RAID0/RAID1, as the other modes (some resembling Intel Matrix RAID, combining RAID0/1 on a single set) are not interesting to me. ASUS software will only enable RAID0/1, I think, but you can download the "SteelVine Manager" from the support section of the Silicon Image website.

The thing is, the Marvell controller is a plain SATA controller; it needs no RAID driver. The SiI SteelVine chip looks like a single drive to the Marvell controller (I think the "normal" mode, or possibly JBOD, acts as a port multiplier and thus exposes two drives, but I would personally steer clear of that mode), so it needs no driver of its own, neither RAID nor SATA.
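To make the "looks like a single drive" idea concrete, here is a minimal sketch of the kind of address translation a RAID bridge has to do. It is not Silicon Image's firmware: the function names and the 64 KiB stripe size are my own assumptions. The Marvell controller only ever sends logical block addresses (LBAs); the bridge decides which member disk(s) they land on.

```python
# Hypothetical sketch: how a bridge chip like the SteelVine *could* map the one
# logical drive it shows the Marvell controller onto two member disks.
# The 64 KiB stripe size is an assumption, not a documented SiI5723 value.

STRIPE_SECTORS = 128  # assumed stripe unit: 128 x 512-byte sectors = 64 KiB


def raid0_map(lba, disks=2, stripe=STRIPE_SECTORS):
    """Return (member disk, physical LBA) for a logical LBA in a RAID0 set."""
    stripe_no, offset = divmod(lba, stripe)  # which stripe unit, and where in it
    disk = stripe_no % disks                 # stripe units alternate between disks
    row = stripe_no // disks                 # full rows of stripe units before this one
    return disk, row * stripe + offset


def raid1_map(lba, disks=2):
    """RAID1: every member stores the same LBA, so a write goes to all of them."""
    return [(disk, lba) for disk in range(disks)]


if __name__ == "__main__":
    for lba in (0, 127, 128, 256, 300):
        print(lba, raid0_map(lba), raid1_map(lba))
```

Under these assumptions, logical sector 128 lands on sector 0 of the second disk in RAID0, while in RAID1 the same sector is simply written to both disks. The controller never sees any of this, which is why no driver is needed.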

So, how does this work in practice? It's OK, I think. RAID0 performance is nothing to write home about (it maxes out at 120MB/sec with two VelociRaptors), but RAID1 is fine (no worse than single-drive performance). One problem is that the SteelVine hides the raw SMART data, and you cannot access it from the SteelVine Manager either. This is a common problem with many cheaper RAID solutions.

A single drive from a SteelVine RAID1 array can be used on the ICH10R if you set the chipset to non-RAID mode (legacy IDE, not AHCI). The reason is that the ICH in RAID mode detects the SteelVine disks as a native Intel array (!) but refuses to boot from them. Of course, setting the ICH to non-RAID might drop your other arrays, so be careful! However, the ICH10R has no NVRAM to remember its configuration; it reads the disks themselves to determine array membership, so you can remove the other array's disks from the system entirely while doing non-RAID work.
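Since the ICH10R keeps no configuration in NVRAM, the array definition has to live on the disks themselves. As a rough illustration, here is a sketch that checks a disk for Intel RAID metadata. The layout is an assumption based on how, as far as I can tell, Linux mdadm handles Intel (IMSM) metadata: an anchor block in the second-to-last 512-byte sector, starting with the signature string below. The helper name is hypothetical, and I have not verified this against the 10.1 OROM specifically.

```python
# Sketch: does this disk (or disk image) carry Intel Matrix/RST RAID metadata?
# Assumption: on-disk layout as used by Linux mdadm's IMSM support, i.e. an
# anchor block in the second-to-last 512-byte sector that starts with the
# signature below. has_imsm_metadata() is a hypothetical helper name.
import os

IMSM_SIGNATURE = b"Intel Raid ISM Cfg Sig. "
SECTOR = 512


def has_imsm_metadata(path):
    """Return True if the second-to-last sector starts with the IMSM signature."""
    with open(path, "rb") as disk:
        size = disk.seek(0, os.SEEK_END)  # seek() returns the new offset, i.e. the size
        if size < 2 * SECTOR:
            return False                  # too small to hold the anchor block
        disk.seek(size - 2 * SECTOR)
        anchor = disk.read(SECTOR)
    return anchor.startswith(IMSM_SIGNATURE)


if __name__ == "__main__":
    import sys
    for path in sys.argv[1:]:  # e.g. disk image files, or /dev/sdX on Linux as root
        print(path, has_imsm_metadata(path))
```

Run against images of the disks (or the raw block devices on a Linux box), this shows which ones carry Intel array metadata under these assumptions. It also illustrates why pulling the other array's disks out is harmless: the metadata travels with the disks.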

I used the following procedure to migrate from a SteelVine RAID1 array to an identical array (but with faster disks) on the same controller:

Benchmarking

TL;DR: Soooo, after all this talk, let's see how this performs. Note that I flashed the P5Q with an awesome modded BIOS that includes newer Intel RAID code than the stock BIOS. I used the "P5Q Series 5th Anniversary FINAL mBIOS" to get the Intel 10.1 OROM, which is the best version for RAID. Works fine. This doesn't affect the SteelVine, of course. The CPU is a Core 2 Quad Q9505S (a special 65W TDP quad core).

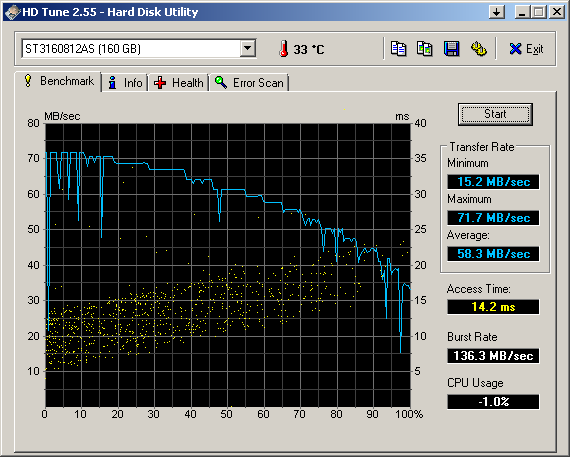

Let's start with a baseline HD Tune test of an ordinary (old) disk on the ICH10R in IDE mode. This is typical performance for an older disk (a Seagate 160GB 7200.9). Notice the shape of the curve (the disk gets slower towards the center of the platters) and the plateaus in it (which reflect the zoning of the disk). SteelVine performance in RAID1 with two such disks is pretty much the same.
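The plateaus are easy to reason about with a back-of-the-envelope model: in one revolution a full track passes under the head, so sequential speed is roughly sectors per track × 512 bytes × revolutions per second. Outer zones pack more sectors per track than inner ones, hence the stepped, falling curve. The zone table below is invented for illustration (it is not the real 7200.9 geometry), and the model ignores head and track switch overhead, so real numbers come out lower.

```python
# Back-of-the-envelope zoned-recording model. The zone table is hypothetical,
# not real Seagate 7200.9 geometry; head and track switch time is ignored.

SECTOR = 512   # bytes per sector
RPM = 7200

# (start position as fraction of capacity, sectors per track): made-up values
ZONES = [(0.00, 1200), (0.25, 1050), (0.50, 900), (0.75, 750)]


def zone_throughput_mb_s(sectors_per_track, rpm=RPM):
    """One full track passes the head per revolution."""
    return sectors_per_track * SECTOR * (rpm / 60) / 1e6


def throughput_at(position, zones=ZONES):
    """Sequential MB/s at a position from 0.0 (start, outer edge) to 1.0 (end, inner)."""
    sectors = zones[0][1]
    for start, spt in zones:
        if position >= start:
            sectors = spt  # last zone that starts at or before this position
    return zone_throughput_mb_s(sectors)


if __name__ == "__main__":
    for pos in (0.0, 0.2, 0.3, 0.6, 0.9):
        print(f"{pos:.1f}: {throughput_at(pos):.1f} MB/s")
```

With these made-up zones the rate is flat within each zone and steps down at every boundary, from about 74 MB/s in the outermost zone to about 46 MB/s in the innermost one: the same plateau-and-drop shape HD Tune draws.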

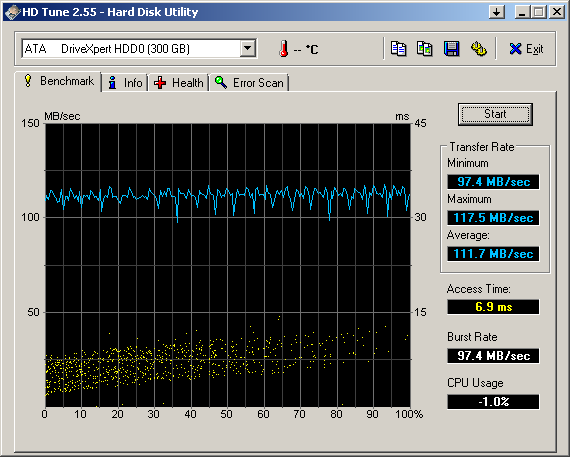

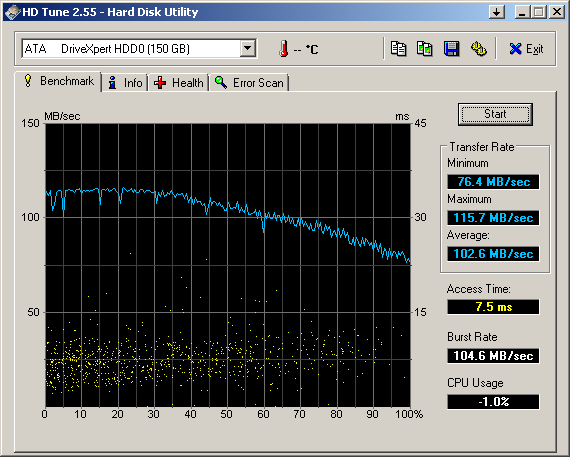

These are two Western Digital VelociRaptors (WD1500HLFS, FW 04.04.V06) in RAID0. As you can see, the bandwidth curve is flat, indicating that the controller limits performance severely. However, the seek time scatter plot has a slope that indicates normal operation, namely that random seeks carry a penalty towards the center of the disks. The bandwidth at the outer edge should be about twice this. Whether the bottleneck is the SteelVine or the Marvell it is attached to, I don't know, but running RAID0 on this controller is not a good choice.
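Why does a controller limit show up as a flat line? A simple bottleneck model makes it obvious: the array can never deliver more than the slowest link in the chain allows. The per-disk figures below are made up for illustration; only the 120 MB/s ceiling comes from my RAID0 measurement, and treating the Marvell/SteelVine path as one fixed cap is an assumption.

```python
# Hypothetical bottleneck model: array throughput = min(what the disks can
# deliver, what the Marvell/SteelVine path can carry). Disk figures are
# illustrative, not measured VelociRaptor numbers.

OUTER_MB_S = 115.0     # assumed single-disk rate at the start of the disk
INNER_MB_S = 75.0      # assumed single-disk rate at the end of the disk
PATH_CAP_MB_S = 120.0  # ceiling observed for RAID0 on the SteelVine


def disk_rate(position):
    """Single-disk sequential rate, falling linearly from outer (0.0) to inner (1.0)."""
    return OUTER_MB_S + (INNER_MB_S - OUTER_MB_S) * position


def array_rate(position, level, disks=2, cap=PATH_CAP_MB_S):
    if level == "raid0":
        raw = disks * disk_rate(position)  # striping adds the members together
    elif level == "raid1":
        raw = disk_rate(position)          # assume reads come at single-disk speed
    else:
        raise ValueError(f"unsupported level: {level}")
    return min(raw, cap)


if __name__ == "__main__":
    for pos in (0.0, 0.5, 1.0):
        print(pos, array_rate(pos, "raid0"), array_rate(pos, "raid1"))
```

Because even the slowest part of the RAID0 (both disks on their inner tracks) exceeds the cap in this model, every point is clipped to 120 MB/s and the curve goes flat. RAID1 never reaches the cap, so its normal sloped curve survives.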

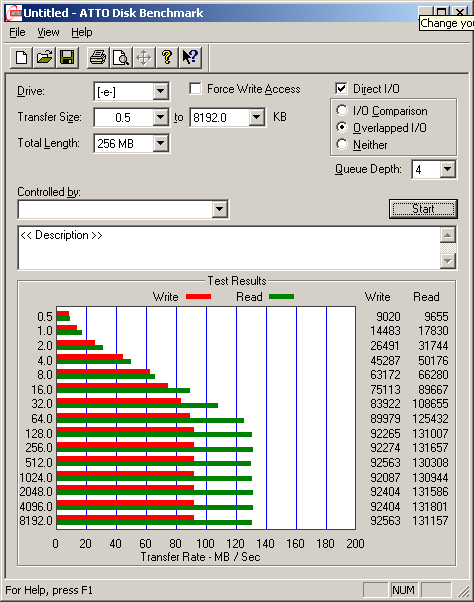

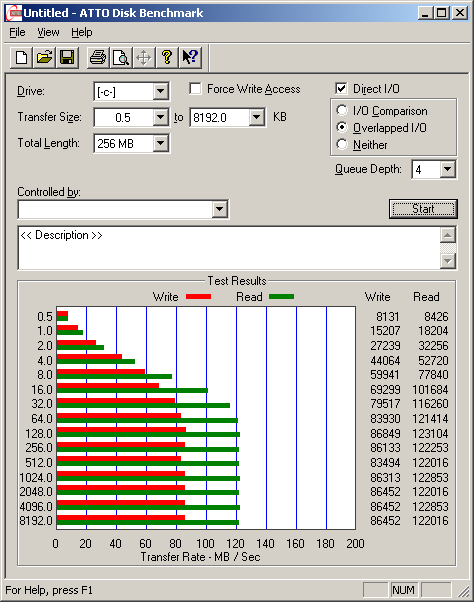

Same RAID0 setup in ATTO.

The same disks in RAID1. As you can see, the curve looks much more natural. In fact, this is pretty much single-disk VelociRaptor performance (which you can verify from reviews of the disk), so the Marvell/SteelVine isn't making anything worse.

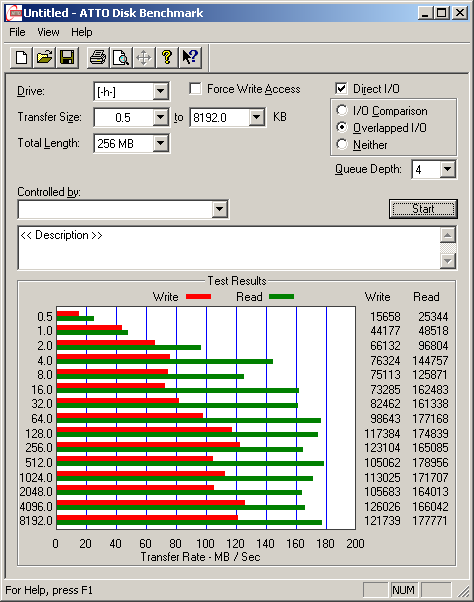

Same RAID1 setup in ATTO.

Bonus benchmarks!

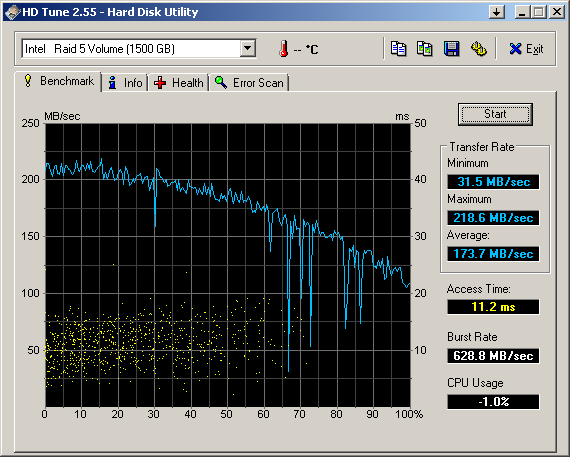

This is not the SteelVine but a four-disk RAID5 array on the ICH10R. Three of the disks are Seagate 500GB 7200.10s and one is a 500GB Western Digital Black. The stripe size is 64k, the NTFS cluster size is also 64k, and write caching is enabled in the Intel console.

Same Intel RAID5 in ATTO. Great performance, methinks, and much better than most budget to mid-priced "hardware" cards; on-board RAID clearly has a place in the arsenal of tools, at least on Windows.
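For those wondering what the RAID5 actually does with those 64k stripes, here is a minimal sketch of the parity math and the parity rotation. The rotation is an assumption: I have used the left-asymmetric layout, which, as far as I know, is what Linux mdadm uses for Intel (IMSM) RAID5 volumes. The helper names are hypothetical.

```python
# Sketch of four-disk RAID5 with 64 KiB stripe units, as on the ICH10R array.
# Assumption: left-asymmetric parity rotation (what Linux mdadm uses for
# Intel IMSM RAID5). Function names are hypothetical.
from functools import reduce

STRIPE_UNIT = 64 * 1024                  # bytes per stripe unit (the "64k")
DISKS = 4
FULL_STRIPE = (DISKS - 1) * STRIPE_UNIT  # data bytes per row: 192 KiB
USABLE_GB = (DISKS - 1) * 500            # four 500 GB members: ~1500 GB before metadata


def parity(blocks):
    """XOR equal-length blocks byte by byte to get the parity block."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity_disk(row, disks=DISKS):
    """Left-asymmetric: parity starts on the last disk and moves left each row."""
    return (disks - 1) - (row % disks)


def rebuild(surviving_blocks):
    """A single lost block (data or parity) is the XOR of all the others in its row."""
    return parity(surviving_blocks)


if __name__ == "__main__":
    d0, d1, d2 = b"\x01\x02", b"\x03\x04", b"\x05\x06"
    p = parity([d0, d1, d2])
    print(p.hex(), rebuild([d0, d2, p]) == d1, [parity_disk(r) for r in range(5)])
```

One 64k NTFS cluster fills exactly one stripe unit, and a full row holds 192k of data. Any write smaller than a full row needs old data or parity read back before the new parity can be computed, which is presumably where the write cache earns its keep.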